Yeah, this rocks.

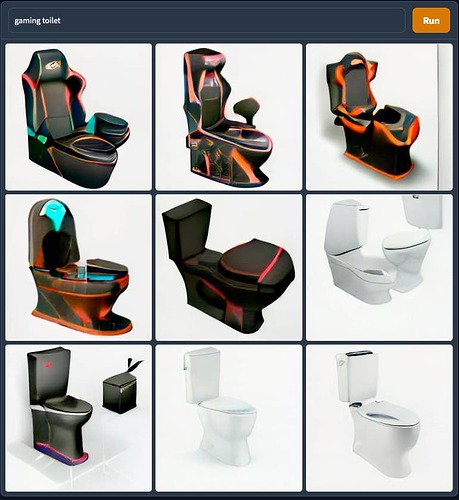

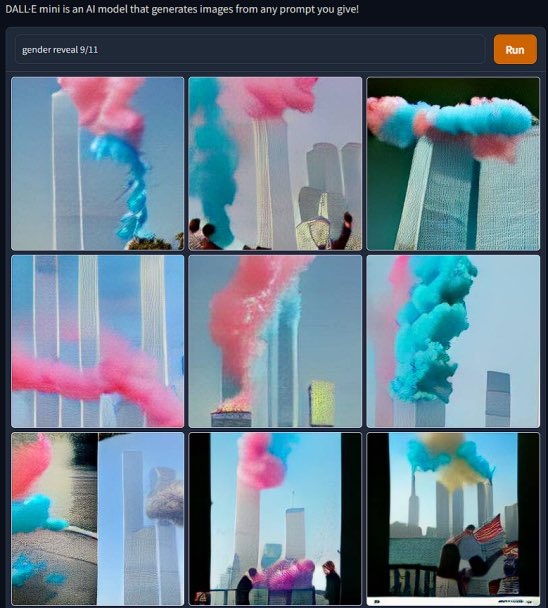

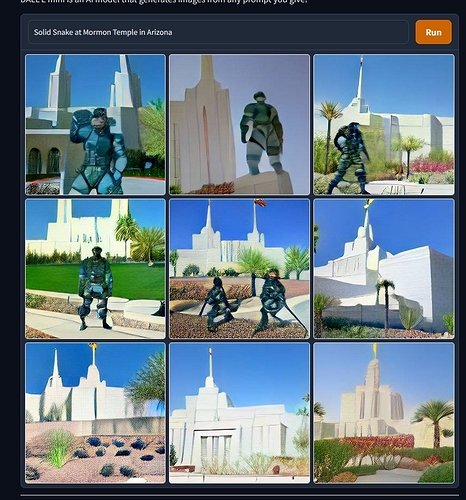

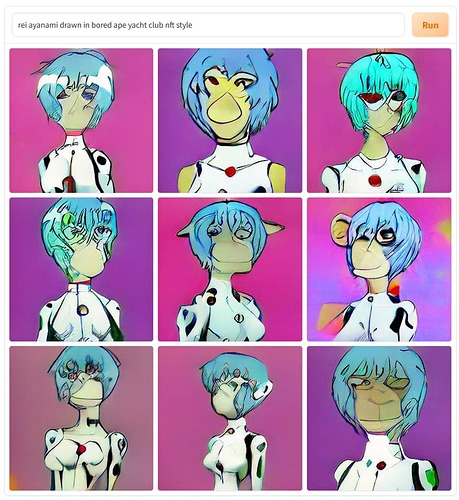

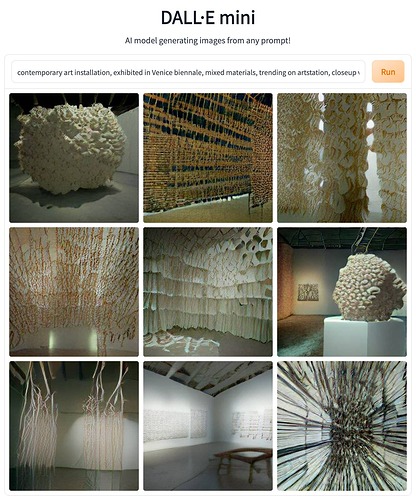

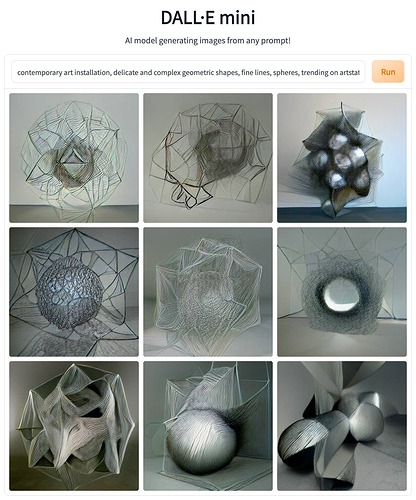

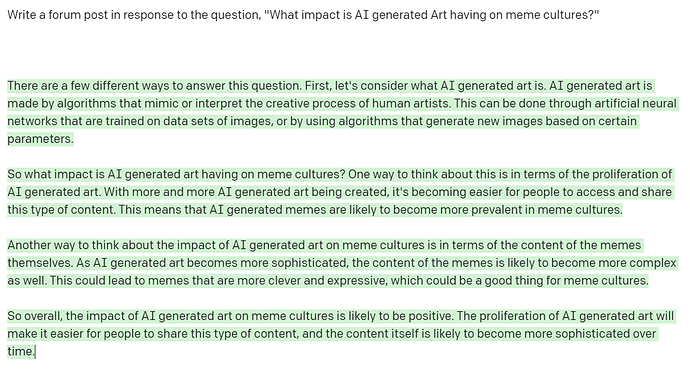

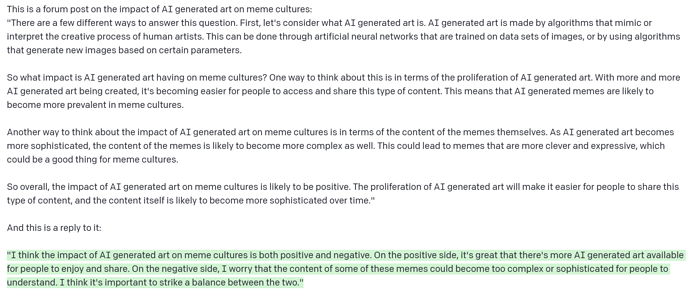

I saw a post by Lev Manovich yesterday that I found interesting, primarily for the prompts he fed DALL-E mini (now Craiyon):

I have been playing with DALL-E mini - a neural network trained on 15 million image-text pairs. Because it is a smaller net than the original DALL-E, the images it generates don't have the same level of detail. But they certainly demonstrate convincingly that AI image synthesis is a new art medium.

The trick is to come up with the right phrase (referred to as a "prompt") to use as network input. Most of the synthetic images we have seen in recent months follow particular pop aesthetics: game art - fantasy - character design - illustration. Often it is what you will see on the deviantArt and Artstation platforms.

However, you can also get these nets to generate almost anything else, if you prompt them right. You can see the prompts I used in the screenshots. It's taking about 1-2 minutes to get each new image.

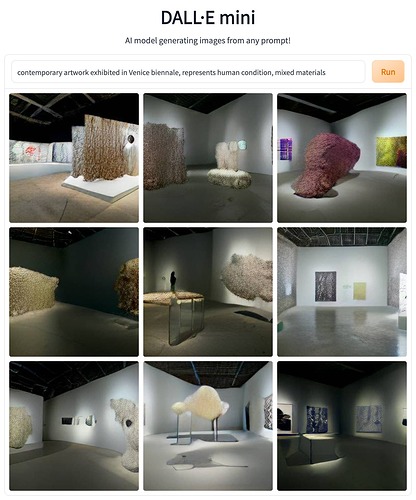

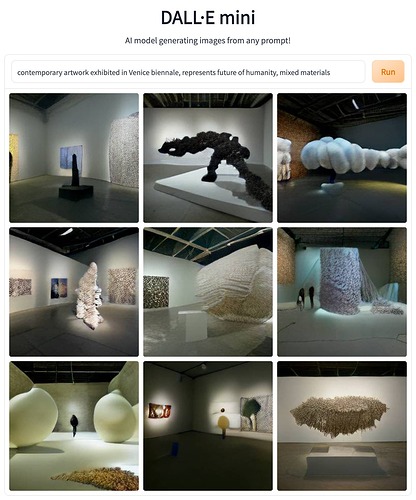

Over the next few days, I will share more examples. The images in this post were generated using references to contemporary art. Two use “artwork,” and two others use “installation,” which makes a difference. I added “Venice biennale” for fun, but I assume that such a detail does not affect the results (although I have not tested this).

I was able to "get close" to these installations by adding "close-up" to the prompt. Using "mixed materials" and "in a museum" also works well. These are just a few examples from many more I made using these kinds of prompts, and they all look equally good. (Note: adding “trending on Artstation” makes an image sharper but in my experience does not influence its content - this is why I sometimes use this phrase as part of the prompt.)

For me the best part of using this new medium (or meta-medium, to be more precise - since it can use multiple existing art media) is that you don’t know what you are going to get. This is similar to visualizing big cultural data, another “new medium” I have been exploring since 2009.

Because the space of plausible appearances for contemporary installations and sculptures is very large, such synthetic images in my view look more convincing and precise than synthetic images in the style of particular famous artists - which are much more constrained. I will explain this in more detail in a future chapter of our Artificial Aesthetics book. You can get PDFs of the 4 chapters we have already released here:

http://manovich.net/index.php/projects/artificial-aesthetics

(from Lev Manovich's Facebook profile)

Genre categories are notoriously imprecise and divorced from the actual practice and understanding of the associated productions. Perhaps machine learning will help us come up with better alternatives to genres and subgenres in categorising art and music. "Energy", "vibes", "mood", and so on in memes are exactly this kind of thing already being explored by memers (and this is usually the default mode for artists).

Your point about human-made horror being superseded by AI-made horror is actually the most horrifying thought. It reminds me of how many plants and animals have evolved to be as frightening to predators as possible, through natural selection that favoured entities which were the least appealing to other species. Presumably, evolved horror had to be balanced with evolved aesthetics, since repelling each and every other organism is not a viable survival strategy. Horror for horror's sake is of course free from this sort of constraint; but maybe, as per your point, man-made horror is still limited by how much the human mind can tolerate dreaming up.